UOMOP

Performance Comparison according to Data Augmentation 본문

1. 각종 모듈 호출

import os

import cv2

import numpy as np

import pandas as pd

import tensorflow as tf

import matplotlib.pyplot as plt

%matplotlib inline

from sklearn.model_selection import train_test_split

from tensorflow.keras.datasets import cifar10

from tensorflow.keras.utils import to_categorical

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Conv2D, BatchNormalization, Activation, MaxPooling2D,\

GlobalAveragePooling2D, Dropout, Dense

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.callbacks import ReduceLROnPlateau, EarlyStopping2. 각종 함수 정의

def make_float(images, labels) :

images = np.array(images, dtype = np.float32)

labels = np.array(labels, dtype = np.float32)

return images, labels

def ohe(labels) :

labels = to_categorical(labels)

return labels

def tr_val_test(train_images, train_labels, test_images, test_labels, val_rate) :

tr_images, val_images, tr_labels, val_labels =\

train_test_split(train_images, train_labels, test_size=val_rate)

return (tr_images, tr_labels), (val_images, val_labels), (test_images, test_labels)

def create_model(tr_images, verbose) :

input_size = (tr_images.shape)[1]

input_tensor = Input(shape = (input_size, input_size, 3))

x = Conv2D(filters=64, kernel_size=(3, 3), padding="same")(input_tensor)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = Conv2D(filters=64, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

x = Conv2D(filters=128, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = Conv2D(filters=128, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

x = Conv2D(filters=256, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = Conv2D(filters=256, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = Conv2D(filters=512, kernel_size=(3, 3), padding="same")(x)

x = BatchNormalization()(x)

x = Activation("relu")(x)

x = GlobalAveragePooling2D()(x)

x = Dropout(rate=0.5)(x)

x = Dense(50, activation="relu")(x)

x = Dropout(rate=0.2)(x)

output = Dense(10, activation="softmax")(x)

model = Model(inputs=input_tensor, outputs=output)

if verbose==True:

model.summary()

return model

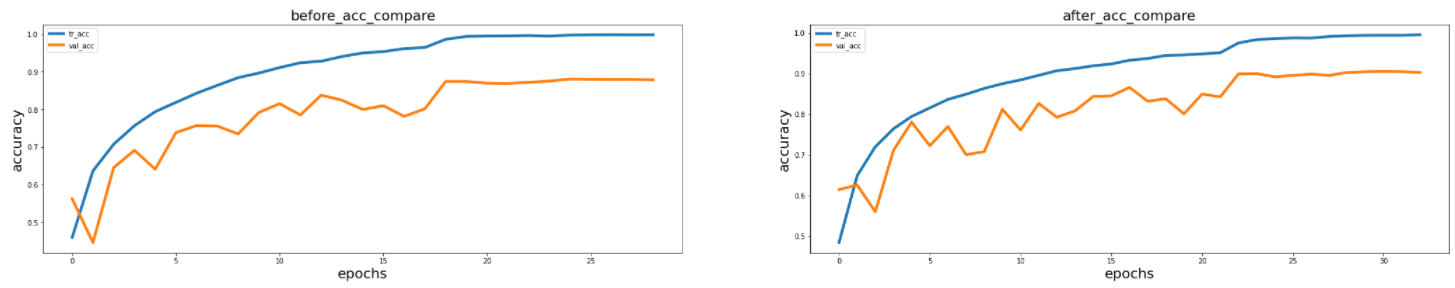

def compare_acc(result_1, result_2) :

fig, axs = plt.subplots(nrows=1, ncols=2, figsize=(36, 6))

axs[0].plot(result_1.history["accuracy"], label="tr_acc", linewidth=4)

axs[0].plot(result_1.history["val_accuracy"], label="val_acc", linewidth=4)

axs[0].set_title("before_acc_compare", fontsize=20)

axs[0].set_xlabel("epochs", fontsize=20)

axs[0].set_ylabel("accuracy", fontsize=20)

axs[0].legend()

axs[1].plot(result_2.history["accuracy"], label="tr_acc", linewidth=4)

axs[1].plot(result_2.history["val_accuracy"], label="val_acc", linewidth=4)

axs[1].set_title("after_acc_compare", fontsize=20)

axs[1].set_xlabel("epochs", fontsize=20)

axs[1].set_ylabel("accuracy", fontsize=20)

axs[1].legend()

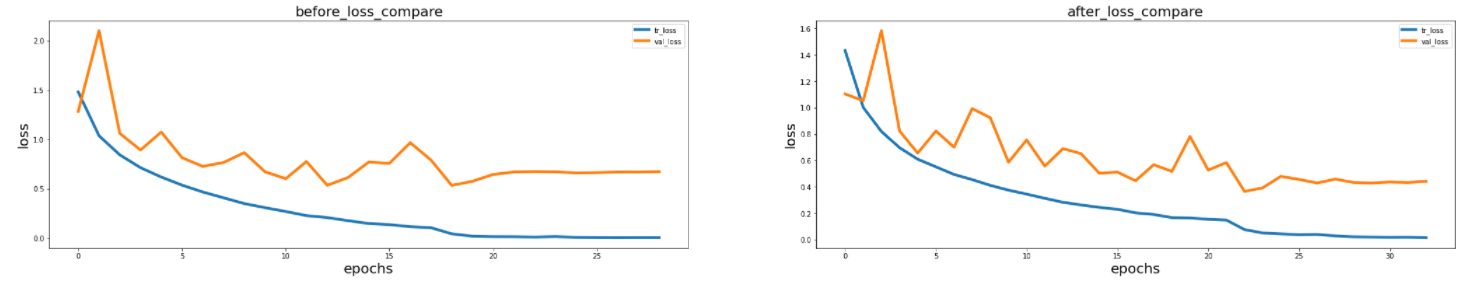

def compare_loss(result_1, result_2) :

fig, axs = plt.subplots(nrows=1, ncols=2, figsize=(36, 6))

axs[0].plot(result_1.history["loss"], label="tr_loss", linewidth=4)

axs[0].plot(result_1.history["val_loss"], label="val_loss", linewidth=4)

axs[0].set_title("before_loss_compare", fontsize=20)

axs[0].set_xlabel("epochs", fontsize=20)

axs[0].set_ylabel("loss", fontsize=20)

axs[0].legend()

axs[1].plot(result_2.history["loss"], label="tr_loss", linewidth=4)

axs[1].plot(result_2.history["val_loss"], label="val_loss", linewidth=4)

axs[1].set_title("after_loss_compare", fontsize=20)

axs[1].set_xlabel("epochs", fontsize=20)

axs[1].set_ylabel("loss", fontsize=20)

axs[1].legend()

plt.show()

def check_images(images, labels, how_many) :

NAMES = np.array(["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse",\

"ship", "truck"])

fig, axs = plt.subplots(nrows=1, ncols=how_many, figsize=(22, 6))

for i in range(how_many) :

axs[i].imshow(images[i])

axs[i].set_title(np.squeeze(NAMES[labels[i]]))

axs[i].axis("off")data의 normalization을 항상 함수로 정의해주어, 255를 나눈 값을 사용하였지만, 이번 예제에서는 ImageDataGenerator를 사용하여 scaling을 해보도록 한다.

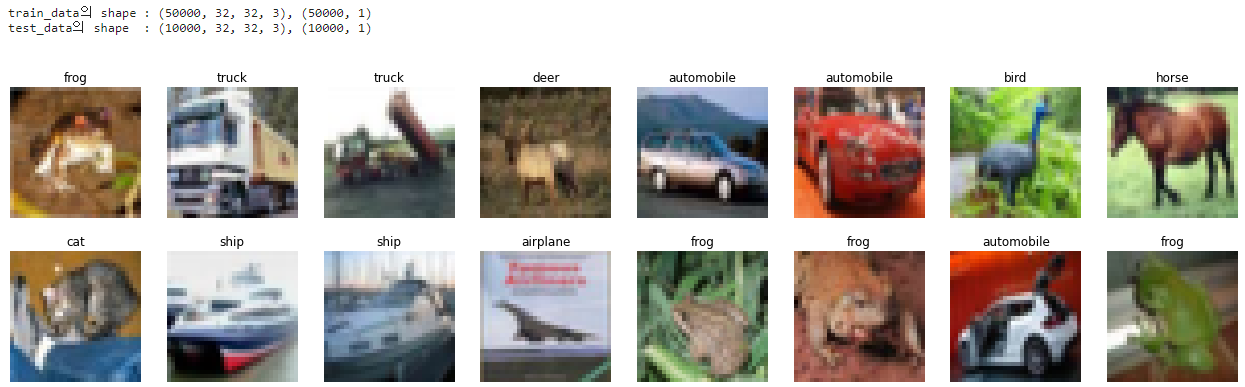

3. cifar10 데이터 확인

(train_images, train_labels), (test_images, test_labels) = cifar10.load_data()

print("train_data의 shape : {}, {}".format(train_images.shape, train_labels.shape))

print("test_data의 shape : {}, {}\n\n".format(test_images.shape, test_labels.shape))

check_images(train_images, train_labels, how_many=8)

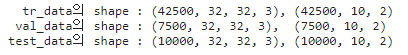

check_images(test_images, test_labels, how_many=8)4. 데이터 전처리(Data Preprocessing)

train_images, train_labels = make_float(train_images, train_labels)

test_images, test_labels = make_float(test_images, test_labels)

train_labels = ohe(train_labels)

test_labels = ohe(test_labels)

(tr_images, tr_labels), (val_images, val_labels), (test_images, test_labels) = \

tr_val_test(train_images, train_labels, test_images, test_labels, val_rate=0.15)

print(" tr_data의 shape : {}, {}".format(tr_images.shape, tr_labels.shape))

print(" val_data의 shape : {}, {}".format(val_images.shape, val_labels.shape))

print("test_data의 shape : {}, {}".format(test_images.shape, test_labels.shape))5. CNN model 생성

model_before = create_model(tr_images, verbose=True)

model_before.compile(optimizer=Adam(learning_rate=0.001), loss="categorical_crossentropy", metrics=["accuracy"])

model_after = create_model(tr_images, verbose=True)

model_after.compile(optimizer=Adam(learning_rate=0.001), loss="categorical_crossentropy", metrics=["accuracy"])6. ImageDataGenerator

BATCH_SIZE = 64

tr_generator = ImageDataGenerator(horizontal_flip=True, rescale=1/255.0)

val_generator = ImageDataGenerator(rescale=1/255.0)

test_generator = ImageDataGenerator(rescale=1/255.0)

flow_tr_gen = tr_generator.flow(tr_images, tr_labels, batch_size=BATCH_SIZE, shuffle=True)

flow_val_gen = val_generator.flow(val_images, val_labels, batch_size=BATCH_SIZE, shuffle=False)

flow_test_gen = test_generator.flow(test_images, test_labels, batch_size=BATCH_SIZE, shuffle=False)7. callback 생성 및 학습

rlr_cb = ReduceLROnPlateau(monitor="val_loss", mode="min", factor=0.2, patience=5, verbose=True)

ely_cb = EarlyStopping(monitor="val_loss", mode="min", patience=10, verbose=True )

result_before = model_before.fit(tr_images, tr_labels, batch_size=64, epochs=40, shuffle=True,\

validation_data=(val_images, val_labels), callbacks=[rlr_cb, ely_cb])

result_after = model_after.fit(flow_tr_gen, epochs=40, validation_data=flow_val_gen,\

callbacks=[rlr_cb, ely_cb])8. 성능 비교(tr_data, val_data)

compare_acc(result_before, result_after)

compare_loss(result_before, result_after)데이터 증강이 이루어진 후에 overfitting이 어느정도 개선된 것을 확인할 수 있었다.

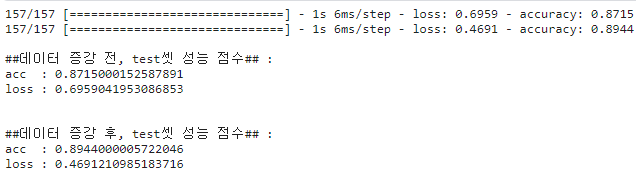

9. 성능 비교(test_data)

eval_before = model_before.evaluate(test_images, test_labels, batch_size=BATCH_SIZE)

eval_after = model_after.evaluate(flow_test_gen)

print("\n##데이터 증강 전, test셋 성능 점수## : \nacc : {}\nloss : {}\n\n".format(eval_before[1], eval_before[0]))

print("##데이터 증강 후, test셋 성능 점수## : \nacc : {}\nloss : {}".format(eval_after[1], eval_after[0]))3번의 실험을 진행하였다.

3번 모두 데이터 증강한 후의 성능이 더 좋아진 것을 확인할 수 있었다.

'Ai > DL' 카테고리의 다른 글

| My model vs VGG16 (0) | 2022.02.14 |

|---|---|

| Pixel value "Normalization" (0) | 2022.02.10 |

| Data Augmentation(픽셀 기반) (0) | 2022.02.10 |

| Data Augmentation(공간 기반) (0) | 2022.02.10 |

| Data Augmentation(데이터 증강) 기본 (0) | 2022.02.10 |

Comments