List: DE (34)

UOMOP

DE : Selection (33%, 60%, 75%)

```python
import torchvision.transforms as transforms
import math
import torch
import torchvision
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, Dataset
import time
import os
from tqdm import tqdm
import numpy as np
from fractions import Fraction
import torch.nn.functi..
```
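The title lists three selection ratios (33%, 60%, 75%). As a rough sketch, assuming a 32×32 (CIFAR-10-sized) input split into non-overlapping square patches and rounding down, the number of patches each ratio selects works out as below. Whether the ratio counts kept or masked patches is not stated in this entry, and `selected_patch_count` is a hypothetical helper.

```python
import math


def selected_patch_count(image_size: int, patch_size: int, ratio: float) -> tuple[int, int]:
    """Return (total_patches, selected_patches) for a square image.

    Hypothetical helper: assumes non-overlapping patches and floor rounding.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    total = per_side * per_side
    return total, math.floor(total * ratio)


# Assumed 32x32 input, patch sizes 1 and 2 as in the performance posts
for patch_size in (1, 2):
    for ratio in (0.33, 0.60, 0.75):
        total, chosen = selected_patch_count(32, patch_size, ratio)
        print(f"patch_size={patch_size}, ratio={ratio:.0%}: {chosen}/{total} patches")
```

With patch size 2 there are 256 patches, so 75% corresponds to 192 of them; with patch size 1 the same ratio covers 768 of 1024 pixels.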

Masked, Reshaped, index Gen

```python
import torch
import numpy as np
import matplotlib.pyplot as plt
import torchvision.transforms as transforms
from torchvision.datasets import CIFAR10
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

def patch_importance(image, patch_size=2, type='variance', how_many=2):
    if isinstance(image, torch.Tensor):..
```
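The preview shows only the signature of `patch_importance` with `type='variance'`. A minimal NumPy sketch of the same idea, under stated assumptions: the image is a `(C, H, W)` array, each non-overlapping patch is scored by the variance of its pixels, and the highest-variance fraction is kept. The function name `variance_patch_selection` and the `keep_ratio` parameter are hypothetical; the meaning of the original `how_many` argument is not shown, so it is not reproduced here. The function returns the reshaped patches, the kept indices, and a boolean mask, matching the "masked, reshaped, index" outputs named in the title.

```python
import numpy as np


def variance_patch_selection(image: np.ndarray, patch_size: int = 2, keep_ratio: float = 0.5):
    """Score non-overlapping patches by pixel variance and keep the highest-variance ones.

    image: array of shape (C, H, W); H and W must be divisible by patch_size.
    Returns (patches, kept_idx, mask):
      patches  -> (num_patches, C * patch_size * patch_size), row-major patch order
      kept_idx -> sorted indices of the kept patches
      mask     -> boolean (H // patch_size, W // patch_size) grid, True = kept
    """
    c, h, w = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("H and W must be divisible by patch_size")
    gh, gw = h // patch_size, w // patch_size
    # (C, gh, p, gw, p) -> (gh, gw, C, p, p) -> (gh * gw, C * p * p)
    patches = (image.reshape(c, gh, patch_size, gw, patch_size)
                    .transpose(1, 3, 0, 2, 4)
                    .reshape(gh * gw, -1))
    scores = patches.var(axis=1)  # one variance per patch
    k = int(np.floor(keep_ratio * gh * gw))
    # Stable sort on negated scores: highest variance first, ties keep patch order
    kept_idx = np.sort(np.argsort(-scores, kind="stable")[:k])
    mask = np.zeros(gh * gw, dtype=bool)
    mask[kept_idx] = True
    return patches, kept_idx, mask.reshape(gh, gw)


# Usage on a random 3x32x32 image, keeping a quarter of the 2x2 patches
rng = np.random.default_rng(0)
img = rng.random((3, 32, 32)).astype(np.float32)
patches, kept, mask = variance_patch_selection(img, patch_size=2, keep_ratio=0.25)
print(patches.shape, kept.shape, mask.sum())  # (256, 12) (64,) 64
```

Sorting the kept indices keeps them in raster order, which makes it straightforward to gather the selected rows of `patches` in a fixed order.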

DIM=256 : [17.638, 21.318, 24.505, 25.997, 26.248]

DIM=512 : [18.781, 22.801, 26.817, 29.227, 30.021]

DIM=1024 : [19.958, 23.363, 28.337, 30.97, 33.79]
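The three lists can be compared point by point. They appear to be PSNR values in dB (the same range as the PSNR tables in the performance posts), but this entry does not label them or say which channel condition each of the five positions corresponds to, so the sketch below only indexes them.

```python
# Values copied from the DIM=256/512/1024 lists above
psnr = {
    256: [17.638, 21.318, 24.505, 25.997, 26.248],
    512: [18.781, 22.801, 26.817, 29.227, 30.021],
    1024: [19.958, 23.363, 28.337, 30.97, 33.79],
}


def gain_over(base_dim: int, dim: int) -> list[float]:
    """Point-wise difference between two latent sizes, rounded to 3 decimals."""
    return [round(a - b, 3) for a, b in zip(psnr[dim], psnr[base_dim])]


for dim in (512, 1024):
    print(f"DIM={dim} vs DIM=256:", gain_over(256, dim))
```

On these numbers the gap between DIM=1024 and DIM=256 grows from 2.32 at the first position to 7.542 at the last.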

Proposed Network Architecture

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self, latent_dim):
        super(Encoder, self).__init__()
        self.latent_dim = latent_dim
        self.in1 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in2 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in3 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in4 ..
```

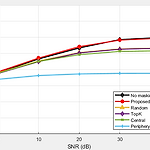
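The input branches `in1` to `in3` shown in the preview are each `Conv2d(3, 32, kernel_size=5, stride=1, padding=0)`. Their output size follows the standard convolution formula from the PyTorch `Conv2d` documentation. Assuming a 32×32 input (CIFAR-10 size, not stated in this entry), each such branch produces a 32×28×28 feature map. A plain-Python check, with hypothetical helper names:

```python
def conv2d_out(size: int, kernel_size: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Spatial output size of a 2-D convolution along one axis."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def conv2d_params(in_ch: int, out_ch: int, kernel_size: int, bias: bool = True) -> int:
    """Number of learnable parameters in a square-kernel Conv2d layer."""
    return in_ch * out_ch * kernel_size * kernel_size + (out_ch if bias else 0)


h = w = 32  # assumed CIFAR-10-sized input
out_h = conv2d_out(h, kernel_size=5)
out_w = conv2d_out(w, kernel_size=5)
print((32, out_h, out_w))       # (32, 28, 28): channels, height, width per branch
print(conv2d_params(3, 32, 5))  # 2432 = 3*32*5*5 weights + 32 biases
```

With `padding=0` each 5×5 layer trims 4 pixels from each spatial dimension; `padding=2` would keep the map at 32×32.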

Performance (Patch size = 1)

```matlab
% Set SNR values (dB)
snrdb = [0, 10, 20, 30, 40];
% Initialize PSNR data
data.NoMasking = struct('x1024', [19.958 25.263 28.937 31.97 33.79], ...
    'x512', [19.051 23.277 26.696 29.33 29.956], ...
    'x256', [17.897 21.718 24.645 26.202 26.54]);
data.CBM = struct('x1024', struct('x0_23', [20.571 24.169 28.232 31.348 33.606], ...
    'x0_43', [20..
```

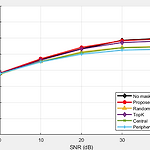
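The visible part of the patch-size-1 script pairs each configuration with PSNR values at `snrdb = [0, 10, 20, 30, 40]`. As a sketch in Python (the language of the other posts), the difference between the `CBM` `x0_23` curve and the unmasked `x1024` baseline can be tabulated per SNR. The meaning of the `x0_23` key is not explained in this entry and the `x0_43` values are truncated, so only these two series are used; `psnr_delta` is a hypothetical helper.

```python
snrdb = [0, 10, 20, 30, 40]
no_masking_x1024 = [19.958, 25.263, 28.937, 31.97, 33.79]
cbm_x1024_x0_23 = [20.571, 24.169, 28.232, 31.348, 33.606]  # patch size = 1


def psnr_delta(candidate, baseline):
    """Per-SNR PSNR difference in dB (positive means candidate is better)."""
    return [round(c - b, 3) for c, b in zip(candidate, baseline)]


for snr, d in zip(snrdb, psnr_delta(cbm_x1024_x0_23, no_masking_x1024)):
    print(f"SNR {snr:>2} dB: {d:+.3f} dB")
```

With these numbers, `x0_23` at patch size 1 is ahead of the unmasked baseline only at 0 dB (+0.613 dB) and behind it from 10 to 40 dB.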

Performance (Patch size = 2)

```matlab
% Set SNR values (dB)
snrdb = [0, 10, 20, 30, 40];
% Initialize PSNR data
data.NoMasking = struct('x1024', [19.958 25.263 28.937 31.97 33.79], ...
    'x512', [19.051 23.277 26.696 29.33 29.956], ...
    'x256', [17.897 21.718 24.645 26.202 26.54]);
data.CBM = struct('x1024', struct('x0_23', [20.485 25.515 29.246 32.048 33.168], ...
    'x0_43', [20..
```
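The `NoMasking` rows are identical in the patch-size-1 and patch-size-2 scripts, so the two posts differ only in the masked runs. A small sketch comparing the `CBM` `x1024` / `x0_23` series across the two patch sizes, using only values that appear in the scripts (the truncated `x0_43` series is left out):

```python
snrdb = [0, 10, 20, 30, 40]
# CBM, x1024, x0_23 series from the two performance scripts
cbm_x0_23 = {
    1: [20.571, 24.169, 28.232, 31.348, 33.606],  # patch size = 1
    2: [20.485, 25.515, 29.246, 32.048, 33.168],  # patch size = 2
}

# Positive values mean patch size 2 gives the higher PSNR at that SNR
delta = [round(p2 - p1, 3) for p1, p2 in zip(cbm_x0_23[1], cbm_x0_23[2])]
for snr, d in zip(snrdb, delta):
    print(f"SNR {snr:>2} dB: patch 2 - patch 1 = {d:+.3f} dB")
```

On these numbers, patch size 2 is better at 10, 20 and 30 dB (up to +1.346 dB) and slightly worse at 0 and 40 dB.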