Category list: DE/Code (18)

UOMOP

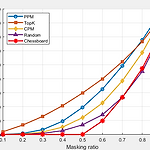

DIM=256 : [17.638, 21.318, 24.505, 25.997, 26.248]

DIM=512 : [18.781, 22.801, 26.817, 29.227, 30.021]

DIM=1024 : [19.958, 23.363, 28.337, 30.97, 33.79]
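The page does not say what the five entries per DIM are. Assuming they are reconstruction PSNR values (dB) measured at the same five evaluation points for every DIM, a short sketch can tabulate how much each larger latent dimension gains over DIM=256. The `results` dict and `gain` helper are illustrative names and do not come from the post.

```python
# Values copied from the listing above; interpreting them as PSNR (dB)
# at five matched evaluation points is an assumption.
results = {
    256: [17.638, 21.318, 24.505, 25.997, 26.248],
    512: [18.781, 22.801, 26.817, 29.227, 30.021],
    1024: [19.958, 23.363, 28.337, 30.97, 33.79],
}

def gain(big, small):
    # Element-wise difference between two DIM settings, rounded to 3 decimals
    return [round(b - s, 3) for b, s in zip(results[big], results[small])]

print(gain(512, 256))
print(gain(1024, 256))
```

On these numbers, every entry rises with DIM. The DIM=1024 vs DIM=256 gap is largest at the last point, at about 7.5.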

Proposed Network Architecture

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self, latent_dim):
        super(Encoder, self).__init__()
        self.latent_dim = latent_dim
        self.in1 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in2 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in3 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
        self.in4 ..
```
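With `kernel_size=5`, `stride=1` and `padding=0`, each of the `in1`–`in3` convolutions shrinks the feature map by 4 pixels per side. The sketch below checks this against the output-size formula from the PyTorch `nn.Conv2d` documentation. The 32×32 RGB input (e.g. CIFAR-10) is an assumption, because the preview does not show the input size. `conv2d_out_size` is a hypothetical helper.

```python
import torch
import torch.nn as nn

def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    # floor((size + 2*padding - dilation*(kernel_size-1) - 1) / stride) + 1
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

x = torch.randn(1, 3, 32, 32)  # assumed 32x32 RGB input
in1 = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)  # as in the excerpt
out = in1(x)
print(tuple(out.shape))  # (1, 32, 28, 28)
print(conv2d_out_size(32, kernel_size=5, padding=0))  # 28
```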

```python
class Decoder(nn.Module):
    def __init__(self, latent_dim):
        super(Decoder, self).__init__()
        self.latent_dim = latent_dim
        self.linear = nn.Linear(self.latent_dim, 2048)
        self.prelu = nn.PReLU()
        self.unflatten = nn.Unflatten(1, (32, 8, 8))
        self.essen = nn.ConvTranspose2d(32, 16, kernel_size=5, stride=2, padding=1, output_padding=1)
        self.in6 = nn.Co..
```
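The visible part of the Decoder maps the latent vector to 2048 features, reshapes them to 32×8×8 (32·8·8 = 2048), and upsamples with `essen`. The sketch below runs only those visible layers to trace the shapes. The value `latent_dim = 256` is a hypothetical choice, and `conv_transpose2d_out_size` is an illustrative helper implementing the formula from the PyTorch `nn.ConvTranspose2d` documentation.

```python
import torch
import torch.nn as nn

def conv_transpose2d_out_size(size, kernel_size, stride=1, padding=0,
                              output_padding=0, dilation=1):
    # (size-1)*stride - 2*padding + dilation*(kernel_size-1) + output_padding + 1
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

latent_dim = 256  # hypothetical; the excerpt takes latent_dim as an argument

# Layers copied from the visible part of the Decoder excerpt
linear = nn.Linear(latent_dim, 2048)
prelu = nn.PReLU()
unflatten = nn.Unflatten(1, (32, 8, 8))
essen = nn.ConvTranspose2d(32, 16, kernel_size=5, stride=2, padding=1, output_padding=1)

z = torch.randn(4, latent_dim)   # batch of 4 latent vectors
h = unflatten(prelu(linear(z)))  # shape (4, 32, 8, 8)
y = essen(h)                     # shape (4, 16, 18, 18)
print(tuple(h.shape), tuple(y.shape))
```

With these settings the map grows from 8×8 to 18×18 rather than exactly doubling. The layers cut off in the preview determine the final output size.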

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    def __init__(self, latent_dim):
        super(Encoder, self).__init__()
        self.latent_dim = latent_dim
        self.in1 = nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=1)
        self.in2 = nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=1)
        self.in3 = nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=1)
        self.in4 ..
```
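The two Encoder previews differ in their first convolutions: 32 filters with `padding=0` versus 16 filters with `padding=1`. A quick comparison of one such layer from each variant shows the trade-off in weights and output size. The 32×32 input is an assumption, and `n_params` is a hypothetical helper.

```python
import torch
import torch.nn as nn

def n_params(module):
    # Total number of trainable weights and biases in a module
    return sum(p.numel() for p in module.parameters())

# First conv of each Encoder variant, as shown in the two excerpts
in1_wide = nn.Conv2d(3, 32, kernel_size=5, stride=1, padding=0)
in1_narrow = nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=1)

x = torch.randn(1, 3, 32, 32)  # assumed 32x32 RGB input
print(n_params(in1_wide), tuple(in1_wide(x).shape))      # 2432 (1, 32, 28, 28)
print(n_params(in1_narrow), tuple(in1_narrow(x).shape))  # 1216 (1, 16, 30, 30)
```

Halving the filters halves the layer's parameters (3·16·25 + 16 = 1216 vs 3·32·25 + 32 = 2432). The extra pixel of padding keeps the map 2 pixels larger per side.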

Proposed net

```python
import math
import torch
import torchvision
from fractions import Fraction
import numpy as np
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as f
import matplotlib.pyplot as plt
import torchvision.transforms as tr
from torchvision import datasets
from torch.utils.data import DataLoader, Dataset
import time
import os
from skimage.metrics import structural_similarity as ssim
from pa..
```
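The import list (`math`, `numpy`, and `skimage`'s `structural_similarity`) suggests reconstructions are scored with PSNR and SSIM. A minimal PSNR sketch follows, assuming images scaled to [0, 1]. The `psnr` name and its `max_val` parameter are illustrative and not taken from the post.

```python
import math
import numpy as np

def psnr(reference, reconstruction, max_val=1.0):
    # Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)

a = np.zeros((3, 32, 32))
b = np.full((3, 32, 32), 0.1)  # uniform error of 0.1 -> MSE = 0.01
print(round(psnr(a, b), 3))  # 20.0
```

For SSIM, `structural_similarity` can be called on H×W×C arrays with `channel_axis=-1` and `data_range=1.0` (scikit-image 0.19 or later).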

Filter counting of zero padding

```python
import numpy as np
import torch
import torchvision
import torchvision.transforms as transforms

def patch_importance(image, patch_size=2, type='variance', how_many=2, noise_scale=0):
    if isinstance(image, torch.Tensor):
        image = image.numpy()
    H, W = image.shape[-2:]
    extended_patch_size = patch_size + 2 * how_many
    value_map = np.zeros((H // patch_size, W // patch_size))
    for i in ra..
```
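The preview stops before the loop body. One plausible reading of the visible setup is to score each `patch_size`×`patch_size` patch by the variance of a window extended by `how_many` pixels on every side (`extended_patch_size = patch_size + 2 * how_many`), zero-padding the image so border windows stay the same size. The sketch below is a hypothetical `patch_variance_map`, not the author's `patch_importance`. It ignores `type` and `noise_scale`.

```python
import numpy as np

def patch_variance_map(image, patch_size=2, how_many=2):
    # Hypothetical: variance of each patch's extended window, zero-padded at borders
    img = np.asarray(image, dtype=np.float64)
    if img.ndim == 2:
        img = img[None]  # treat (H, W) as a single channel
    _, H, W = img.shape
    ext = patch_size + 2 * how_many
    padded = np.pad(img, ((0, 0), (how_many, how_many), (how_many, how_many)),
                    mode="constant")  # zero padding
    value_map = np.zeros((H // patch_size, W // patch_size))
    for i in range(H // patch_size):
        for j in range(W // patch_size):
            r, c = i * patch_size, j * patch_size
            # padded[r, c] corresponds to original pixel (r - how_many, c - how_many)
            window = padded[:, r:r + ext, c:c + ext]
            value_map[i, j] = window.var()
    return value_map

m = patch_variance_map(np.ones((3, 8, 8)), patch_size=2, how_many=2)
print(m.shape)  # (4, 4)
```

On a constant image, only border patches get a nonzero score, because their windows include zero-padded pixels. Any border-aware scoring has to account for this effect.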