UOMOP

Cifar10 Rayleigh with SSIM

```python
import math
import random

import torch
import torchvision
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as f
import torchvision.transforms as tr
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np
from torch.utils.data import DataLoader
from tqdm import tqdm

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)

args = {
    'BATCH_SIZE': 50,
    'LEARNING_RATE': 0.001,
    'NUM_EPOCH': 20,
    'SNR_dB': [1, 10, 20],
    'latent_dim': 500,
    'input_dim': 32 * 32,
}
```
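Each `SNR_dB` entry is converted inside the channel layer to a linear ratio, SNR = 10^(SNR_dB/10), and then to a per-element noise standard deviation 1/sqrt(K·SNR) with K = `latent_dim` = 500. A small stand-alone sketch of that arithmetic for the three configured SNRs (the helper name `noise_std` is hypothetical, not part of the original code):

```python
import math

def noise_std(snr_db, k=500):
    """Per-element noise std for a unit-norm latent of length k (signal power 1/k per element)."""
    snr = 10.0 ** (snr_db / 10.0)  # dB -> linear power ratio
    return 1.0 / math.sqrt(k * snr)

for snr_db in [1, 10, 20]:
    linear = 10.0 ** (snr_db / 10.0)
    print(snr_db, round(linear, 4), round(noise_std(snr_db), 6))
```

Dividing the per-element signal power 1/K by the noise variance gives back exactly the linear SNR, which is why K appears in the standard deviation.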

```python
transf = tr.Compose([tr.ToTensor(), tr.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])
# PyTorch's CIFAR-10 images take values in [0, 1] after ToTensor().
# Normalize(mean=0.5, std=0.5) rescales them to [-1, 1].

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transf)
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transf)

trainloader = DataLoader(trainset, batch_size=args['BATCH_SIZE'], shuffle=True)
testloader = DataLoader(testset, batch_size=args['BATCH_SIZE'], shuffle=True)
```
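`Normalize` with mean 0.5 and std 0.5 computes (x - 0.5) / 0.5 per channel, which maps [0, 1] onto [-1, 1]. The resulting dynamic range of 2 is why the SSIM constant uses L = 2 and the PSNR uses MAX² = 4. A NumPy sketch of the mapping and of the inverse needed before displaying images (the function names are hypothetical, not torchvision API):

```python
import numpy as np

def normalize(x):
    """Per-channel effect of tr.Normalize((0.5,)*3, (0.5,)*3): (x - mean) / std."""
    return (x - 0.5) / 0.5

def denormalize(x):
    """Inverse mapping back to [0, 1], e.g. before plt.imshow."""
    return np.clip(x * 0.5 + 0.5, 0.0, 1.0)

pixels = np.array([0.0, 0.25, 0.5, 1.0])  # values as produced by ToTensor()
print(normalize(pixels))
print(denormalize(normalize(pixels)))
```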

```python
def SSIM(x, y):
    # Assumption: x and y are grayscale images (2-D arrays) with the same shape.
    # This is a global SSIM: the statistics are computed over the whole image,
    # not over local sliding windows.

    def mean(img):
        return np.mean(img)

    def sigma(img):
        return np.std(img)

    def cov(img1, img2):
        img1_ = np.asarray(img1, dtype=np.float64)
        img2_ = np.asarray(img2, dtype=np.float64)
        return np.mean(img1_ * img2_) - mean(img1_) * mean(img2_)

    K1 = 0.01
    K2 = 0.03
    L = 2  # dynamic range of the pixel values: images are normalized to [-1, 1]
    C1 = (K1 * L) ** 2
    C2 = (K2 * L) ** 2
    C3 = C2 / 2

    l = (2 * mean(x) * mean(y) + C1) / (mean(x) ** 2 + mean(y) ** 2 + C1)      # luminance
    c = (2 * sigma(x) * sigma(y) + C2) / (sigma(x) ** 2 + sigma(y) ** 2 + C2)  # contrast
    s = (cov(x, y) + C3) / (sigma(x) * sigma(y) + C3)                          # structure
    return l * c * s
```
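With C3 = C2/2, the contrast and structure terms simplify: since 2σxσy + C2 = 2(σxσy + C3), the product c·s equals (2σxy + C2) / (σx² + σy² + C2), the usual two-factor form of SSIM. A NumPy check of that identity on a random image and a noisy copy (helper names are hypothetical; statistics are population statistics as in `SSIM`):

```python
import numpy as np

K2, L = 0.03, 2
C2 = (K2 * L) ** 2
C3 = C2 / 2

def cs_three_term(x, y):
    """Contrast times structure, written as two separate factors."""
    sx, sy = np.std(x), np.std(y)
    sxy = np.mean(x * y) - np.mean(x) * np.mean(y)
    c = (2 * sx * sy + C2) / (sx ** 2 + sy ** 2 + C2)
    s = (sxy + C3) / (sx * sy + C3)
    return c * s

def cs_two_term(x, y):
    """Combined contrast-structure factor of the two-factor SSIM formula."""
    sx, sy = np.std(x), np.std(y)
    sxy = np.mean(x * y) - np.mean(x) * np.mean(y)
    return (2 * sxy + C2) / (sx ** 2 + sy ** 2 + C2)

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=(32, 32))
y = np.clip(x + rng.normal(0, 0.2, size=x.shape), -1, 1)
print(cs_three_term(x, y), cs_two_term(x, y))
```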

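```python
def transmit_img_AE(model_name, SNRdB, testloader):
    model = Autoencoder().to(device)
    # map_location allows a checkpoint saved on the GPU to be loaded on a CPU-only machine
    model.load_state_dict(torch.load(model_name, map_location=device))
    model.eval()

    plt.figure()

    # Send only the first test batch through the channel
    with torch.no_grad():
        for data in testloader:
            inputs = data[0].to(device)
            outputs = model(inputs, SNRdB=SNRdB)
            break

    rand_num = random.randrange(len(inputs))

    # Undo Normalize(0.5, 0.5): [-1, 1] -> [0, 1] so that imshow shows the right colors
    def to_image(t):
        return (t * 0.5 + 0.5).clamp(0, 1).permute(1, 2, 0).cpu().numpy()

    plt.subplot(1, 2, 1)
    plt.imshow(to_image(inputs[rand_num]))
    plt.title('Original img')

    plt.subplot(1, 2, 2)
    plt.imshow(to_image(outputs[rand_num]))
    plt.title('Decoded img')
    plt.show()
```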

```python
def PSNR_test(model_name, testloader):
    # Recover the SNR from a file name such as "model_AWGN(color)_SNR=10.pth"
    SNRdB = int(model_name.split("_SNR=")[-1].split(".pth")[0])

    model_1 = Autoencoder().to(device)
    model_1.load_state_dict(torch.load(model_name, map_location=device))
    model_1.eval()

    PSNR_list = []
    with torch.no_grad():
        for data in testloader:
            inputs = data[0].to(device)
            outputs = model_1(inputs, SNRdB=SNRdB)

            for j in range(len(inputs)):
                PSNR = 0
                for k in range(3):  # average the PSNR over the R, G, B channels
                    MSE = torch.sum(torch.pow(inputs[j][k] - outputs[j][k], 2)) / args['input_dim']
                    # Pixels lie in [-1, 1]: peak-to-peak range 2, so MAX^2 = 4 (same as the training log)
                    PSNR += 10 * math.log10(4 / MSE.item())
                PSNR_list.append(PSNR / 3)

    avg_PSNR = round(sum(PSNR_list) / len(PSNR_list), 2)
    print("PSNR : {}".format(avg_PSNR))
    return avg_PSNR
```
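PSNR is 10·log10(MAX² / MSE), where MAX is the peak-to-peak range of the pixel values. For images normalized to [-1, 1] that range is 2, so MAX² = 4, the same constant the training log uses; plugging in 1 instead lowers every value by 10·log10(4) ≈ 6.02 dB. A NumPy sketch of the per-image, channel-averaged computation (the function name `psnr_per_image` is hypothetical):

```python
import math
import numpy as np

def psnr_per_image(img, ref, data_range=2.0):
    """Channel-averaged PSNR for (3, H, W) arrays; data_range=2 for pixels in [-1, 1]."""
    total = 0.0
    for k in range(img.shape[0]):
        mse = np.mean((img[k] - ref[k]) ** 2)
        total += 10 * math.log10(data_range ** 2 / mse)
    return total / img.shape[0]

ref = np.zeros((3, 32, 32))
img = ref + 0.2  # every pixel off by 0.2, so MSE = 0.04 in each channel
print(psnr_per_image(img, ref), psnr_per_image(img, ref, data_range=1.0))
```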

```python
def compare_PSNR(SNRdB_list, model_name_without_SNRdB, testloader):
    PSNR_list = []
    for SNRdB in SNRdB_list:
        model_name = model_name_without_SNRdB + "_SNR=" + str(SNRdB) + ".pth"
        PSNR_list.append(PSNR_test(model_name, testloader))

    plt.plot(SNRdB_list, PSNR_list, linestyle='dashed', color='blue', label="Rayleigh")
    plt.xlabel('SNR (dB)')
    plt.ylabel('PSNR (dB)')
    plt.grid(True)
    plt.legend()
    plt.show()
```
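The PSNR comparison relies on the checkpoint naming scheme `<prefix>_SNR=<dB>.pth`. Parsing the SNR by splitting on `_SNR=` and `.pth`, rather than by fixed character positions, also handles values such as `-5` or `100`. A sketch of the round trip (both helper names are hypothetical):

```python
def model_file_name(prefix, snr_db):
    """Naming scheme used when saving and loading: <prefix>_SNR=<dB>.pth"""
    return prefix + "_SNR=" + str(snr_db) + ".pth"

def snr_from_file_name(name):
    """Parse the SNR back out of the file name, whatever its number of digits or sign."""
    return int(name.split("_SNR=")[-1].split(".pth")[0])

for snr_db in [1, 10, 20]:
    name = model_file_name('model_AWGN(color)', snr_db)
    print(name, snr_from_file_name(name))
```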

def model_train(SNRdB_list, learning_rate, epoch_num, trainloader) :

for i in range( len(args['SNR_dB']) ):

model = Autoencoder().to(device)

criterion = nn.MSELoss()

optimizer = optim.Adam(model.parameters(), lr = learning_rate)

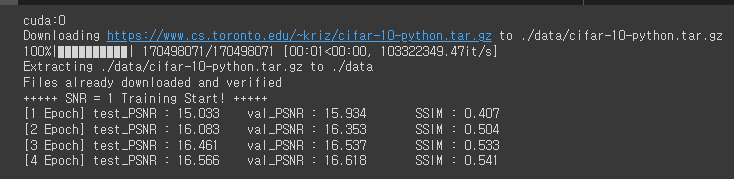

print("+++++ SNR = {} Training Start! +++++\t".format(args['SNR_dB'][i]))

for epoch in range(epoch_num) :

running_loss = 0.0

running_ssim = 0.0

for data in trainloader :

inputs = data[0].to(device)

gray_inputs = transforms.Grayscale()(inputs)

optimizer.zero_grad()

outputs = model( inputs, SNRdB = args['SNR_dB'][i])

gray_outputs = transforms.Grayscale()(outputs)

loss = criterion(inputs, outputs)

loss.backward()

optimizer.step()

running_loss += loss.item()

for k in range(len(gray_inputs)) :

running_ssim += SSIM(gray_inputs[k].detach().cpu().numpy().squeeze(), gray_outputs[k].detach().cpu().numpy().squeeze())

train_loss = running_loss/len(trainloader)

train_ssim = running_ssim/(len(gray_inputs)*len(trainloader))

running_loss = 0.0

for data in testloader :

inputs = data[0].to(device)

outputs = model( inputs, SNRdB = args['SNR_dB'][i])

loss = criterion(inputs, outputs)

running_loss += loss.item()

test_loss = running_loss/len(testloader)

print("[{} Epoch] test_PSNR : {}\tval_PSNR : {}\tSSIM : {}".format(epoch + 1, round(10 * math.log10(4 / train_loss), 3), round(10 * math.log10(4 / test_loss), 3), round(train_ssim, 3)))

print()

PATH = "./"

torch.save(model.state_dict(), PATH + "model_AWGN(color)" + "_SNR=" + str(args['SNR_dB'][i]) +".pth")
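The epoch log converts the *average* MSE into PSNR, whereas `PSNR_test` averages per-image (and per-channel) PSNR values. Because -log10 is convex, the average of the PSNRs is never smaller than the PSNR of the average MSE (Jensen's inequality), so the two numbers are not directly comparable. A small NumPy illustration with made-up MSE values (not measured results):

```python
import math
import numpy as np

def psnr_from_mse(mse, peak_sq=4.0):
    """PSNR in dB for pixels in [-1, 1] (peak-to-peak range 2, so MAX^2 = 4)."""
    return 10 * math.log10(peak_sq / mse)

# Illustrative per-image MSE values
mse_values = np.array([0.01, 0.02, 0.05, 0.2])

psnr_of_mean = psnr_from_mse(mse_values.mean())                      # like the training log
mean_of_psnr = float(np.mean([psnr_from_mse(m) for m in mse_values]))  # like PSNR_test
print(round(psnr_of_mean, 3), round(mean_of_psnr, 3))
```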

```python
class Encoder(nn.Module):
    def __init__(self):
        super(Encoder, self).__init__()
        c_hid = 32
        self.encoder = nn.Sequential(
            nn.Conv2d(3, c_hid, kernel_size=3, padding=1, stride=2),  # 32x32 => 16x16
            nn.ReLU(),
            nn.Conv2d(c_hid, c_hid, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(c_hid, 2 * c_hid, kernel_size=3, padding=1, stride=2),  # 16x16 => 8x8
            nn.ReLU(),
            nn.Conv2d(2 * c_hid, 2 * c_hid, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(2 * c_hid, 2 * c_hid, kernel_size=3, padding=1, stride=2),  # 8x8 => 4x4
            nn.ReLU(),
            nn.Flatten(),  # Image grid to single feature vector (64 x 4 x 4 = 1024)
            nn.Linear(2 * 16 * c_hid, args['latent_dim'])
        )

    def forward(self, x):
        return self.encoder(x)
```
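The spatial sizes in the encoder comments follow from the `nn.Conv2d` output-size formula, floor((H + 2·padding - kernel_size) / stride) + 1 (dilation 1). A sketch that walks the five convolution layers and checks that the flattened length matches the `nn.Linear` input size `2 * 16 * c_hid` (the helper name `conv2d_out` is hypothetical):

```python
def conv2d_out(size, kernel_size=3, padding=1, stride=1):
    """Output size of nn.Conv2d along one spatial axis (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

c_hid = 32
size = 32
for stride in [2, 1, 2, 1, 2]:  # strides of the five Conv2d layers in Encoder, in order
    size = conv2d_out(size, stride=stride)

flattened = 2 * c_hid * size * size  # 64 channels times 4 x 4
print(size, flattened)
```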

```python
class Decoder(nn.Module):
    def __init__(self):
        super(Decoder, self).__init__()
        c_hid = 32
        self.linear = nn.Sequential(
            nn.Linear(args['latent_dim'], 2 * 16 * c_hid),
            nn.ReLU()
        )
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(2 * c_hid, 2 * c_hid, kernel_size=3, output_padding=1, padding=1, stride=2),  # 4x4 => 8x8
            nn.ReLU(),
            nn.Conv2d(2 * c_hid, 2 * c_hid, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.ConvTranspose2d(2 * c_hid, c_hid, kernel_size=3, output_padding=1, padding=1, stride=2),  # 8x8 => 16x16
            nn.ReLU(),
            nn.Conv2d(c_hid, c_hid, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.ConvTranspose2d(c_hid, 3, kernel_size=3, output_padding=1, padding=1, stride=2),  # 16x16 => 32x32
        )

    def forward(self, x):
        x = self.linear(x)
        x = x.reshape(x.shape[0], -1, 4, 4)  # (N, 1024) -> (N, 64, 4, 4)
        decoded = self.decoder(x)
        return decoded
```
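The decoder mirrors the encoder with `nn.ConvTranspose2d`, whose output size is (H - 1)·stride - 2·padding + kernel_size + output_padding (dilation 1). With kernel 3, padding 1, stride 2 and output_padding 1 each layer exactly doubles the size, while the stride-1 `Conv2d` layers keep it unchanged. A sketch of the 4 -> 8 -> 16 -> 32 chain (the helper name is hypothetical):

```python
def conv_transpose2d_out(size, kernel_size=3, padding=1, stride=2, output_padding=1):
    """Output size of nn.ConvTranspose2d along one spatial axis (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel_size + output_padding

c_hid = 32
latent_features = 2 * 16 * c_hid  # output length of Decoder.linear
channels, side = 2 * c_hid, 4     # the reshape to (N, 64, 4, 4)

sizes = [side]
for _ in range(3):  # the three ConvTranspose2d layers
    sizes.append(conv_transpose2d_out(sizes[-1]))
print(sizes)
```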

```python
class Autoencoder(nn.Module):
    def __init__(
        self,
        encoder_class: object = Encoder,
        decoder_class: object = Decoder
    ):
        super(Autoencoder, self).__init__()
        self.encoder = encoder_class()
        self.decoder = decoder_class()

    def Rayleigh(self, input, SNRdB):
        # Power normalization: each latent vector gets unit L2 norm (power 1/K per element)
        normalized_tensor = f.normalize(input, dim=1)

        SNR = 10.0 ** (SNRdB / 10.0)
        K = args['latent_dim']
        std = 1 / math.sqrt(K * SNR)  # chosen so that (1/K) / std^2 = SNR

        # Rayleigh fading amplitude |h| = sqrt(X^2 + Y^2) / sqrt(2), X, Y ~ N(0, 1), so E[|h|^2] = 1.
        # randn_like creates the samples directly on the input's device.
        h = torch.sqrt(torch.randn_like(normalized_tensor) ** 2
                       + torch.randn_like(normalized_tensor) ** 2) / math.sqrt(2)
        n = torch.randn_like(normalized_tensor) * std

        # Received y = h * x + n; zero-forcing equalization gives y / h = x + n / h
        return normalized_tensor + n / h

    def forward(self, x, SNRdB):
        encoded = self.encoder(x)
        encoded_AWGN = self.Rayleigh(encoded, SNRdB)  # latent after the fading channel
        decoded = self.decoder(encoded_AWGN)
        return decoded
```
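The `Rayleigh` layer normalizes each latent vector to unit norm (power 1/K per element), draws a fading amplitude |h| with E[|h|²] = 1, adds Gaussian noise with variance 1/(K·SNR), and divides by h (zero-forcing equalization), so it outputs x + n/h. A NumPy sketch under the same assumptions, checking that E[|h|²] is close to 1 and that the pre-fading SNR matches the requested value (the function name `rayleigh_channel` is hypothetical; random latents stand in for encoder outputs):

```python
import numpy as np

def rayleigh_channel(z, snr_db, rng):
    """NumPy mirror of Autoencoder.Rayleigh: normalize, fade, add noise, equalize."""
    # Unit L2 norm per latent vector -> average power 1/K per element
    x = z / np.linalg.norm(z, axis=1, keepdims=True)
    k = x.shape[1]
    snr = 10.0 ** (snr_db / 10.0)
    std = 1.0 / np.sqrt(k * snr)
    # Rayleigh amplitude: |h| = sqrt(X^2 + Y^2) / sqrt(2) with X, Y ~ N(0, 1)
    h = np.sqrt(rng.standard_normal(x.shape) ** 2 + rng.standard_normal(x.shape) ** 2) / np.sqrt(2)
    n = rng.normal(0.0, std, size=x.shape)
    # y = h * x + n; zero-forcing equalization gives y / h = x + n / h
    return x + n / h, x, h, n

rng = np.random.default_rng(0)
z = rng.standard_normal((1000, 500))  # stand-in for encoder outputs, K = 500
y, x, h, n = rayleigh_channel(z, 10, rng)

measured_snr_db = 10 * np.log10(np.mean(x ** 2) / np.mean(n ** 2))
print(round(float(np.mean(h ** 2)), 3), round(float(measured_snr_db), 2))
```

Because |h| can come arbitrarily close to zero, n/h occasionally takes very large values, so after equalization the noise is much heavier-tailed than in the AWGN case at the same nominal SNR.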

```python
SNRdB_list = args['SNR_dB']
learning_rate = args['LEARNING_RATE']
epoch_num = args['NUM_EPOCH']
model_name_without_SNRdB = 'model_AWGN(color)'  # prefix used by model_train when saving checkpoints

model_train(SNRdB_list, learning_rate, epoch_num, trainloader)
```
